Map Slots Hadoop

ABSTRACT:

A Hadoop cluster is deployed with one master node and multiple slave nodes. The master node runs the JobTracker, which manages task scheduling on the cluster. Each slave node runs a TaskTracker, which controls the execution of individual tasks. Hadoop uses a slot-based task scheduling algorithm: each TaskTracker has a preconfigured number of map slots and reduce slots. These slots can be killed as long as the TaskTracker stays alive to serve map output to the reducers, and Hadoop appears to cope fine with TaskTrackers that have zero slots. However, killing all map slots introduces a potential failure case: if a node serving map data fails, there are no map slots left to recompute that data.

MapReduce is a popular parallel computing paradigm for large-scale data processing in clusters and data centers. A MapReduce workload generally contains a set of jobs, each of which consists of multiple map tasks followed by multiple reduce tasks. Because 1) map tasks can only run in map slots and reduce tasks can only run in reduce slots, and 2) map tasks must generally execute before reduce tasks, different job execution orders and map/reduce slot configurations for a MapReduce workload yield significantly different performance and system utilization. This paper proposes two classes of algorithms to minimize the makespan and the total completion time of an offline MapReduce workload. The first class focuses on job ordering optimization under a given map/reduce slot configuration. The second class considers the scenario in which the map/reduce slot configuration itself can be optimized. We perform simulations as well as experiments on Amazon EC2 and show that the proposed algorithms produce results that are 15 to 80 percent better than unoptimized Hadoop, leading to significant reductions in running time in practice.

EXISTING SYSTEM:

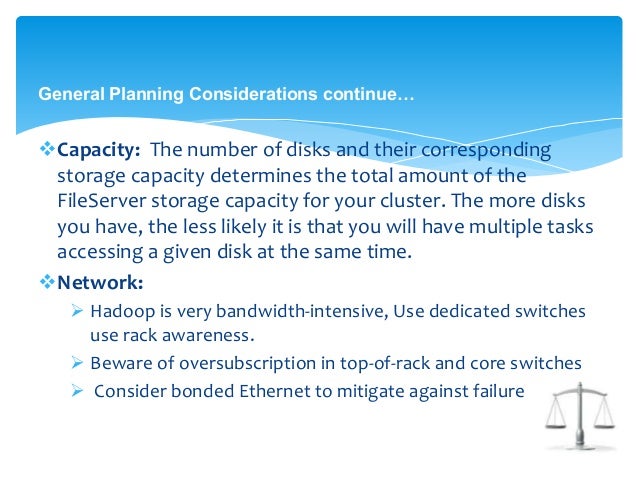

Hadoop MRv1 abstracts the cluster resources into slots (e.g., map slots and reduce slots). Because map and reduce tasks have different slot demands, different map/reduce slot configurations can also yield significantly different performance and system utilization. This motivates both the importance and the challenges of map/reduce slot configuration optimization.

The batch job ordering problem has been extensively studied in the high performance computing literature. Minimizing the makespan has been shown to be NP-hard, and a number of approximation and heuristic algorithms have been proposed. In addition, there has been work on bi-criteria optimization which aims to minimize makespan and total completion time simultaneously.

Moseley et al. present an offline 12-approximation algorithm for minimizing the total flow time of the jobs; this is the sum of the differences between the finishing and arrival times of all the jobs.
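For reference, the three scheduling metrics mentioned in this section can be written down as follows. The notation is an assumption made here for illustration (it does not come from the cited works): $n$ jobs, where job $j$ arrives at time $a_j$ and finishes at time $C_j$.

```latex
\text{total flow time} = \sum_{j=1}^{n} \left( C_j - a_j \right), \qquad
\text{total completion time} = \sum_{j=1}^{n} C_j, \qquad
\text{makespan} = \max_{1 \le j \le n} C_j
```

In an offline workload where every job is available at time zero ($a_j = 0$ for all $j$), total flow time and total completion time coincide.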

Verma et al. propose two algorithms for makespan optimization. One is a greedy job ordering method based on Johnson’s Rule.
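As a rough illustration of Johnson’s Rule itself (the classic two-stage flow-shop ordering, not necessarily the exact method of Verma et al.), the sketch below treats each job as a pair of estimated map-phase and reduce-phase durations. The `Job` class, its field names, and the sample durations are hypothetical.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.Comparator;
import java.util.List;

class JohnsonOrder {
    /** A job with hypothetical estimated map-phase and reduce-phase durations. */
    static final class Job {
        final String name;
        final double mapTime;
        final double reduceTime;

        Job(String name, double mapTime, double reduceTime) {
            this.name = name;
            this.mapTime = mapTime;
            this.reduceTime = reduceTime;
        }
    }

    /** Returns the jobs in the order given by Johnson's Rule. */
    static List<Job> order(List<Job> jobs) {
        List<Job> first = new ArrayList<Job>(); // jobs with mapTime < reduceTime
        List<Job> last = new ArrayList<Job>();  // all other jobs
        for (Job j : jobs) {
            if (j.mapTime < j.reduceTime) {
                first.add(j);
            } else {
                last.add(j);
            }
        }
        // Map-light jobs go first, shortest map phase first.
        Collections.sort(first, new Comparator<Job>() {
            public int compare(Job a, Job b) {
                return Double.compare(a.mapTime, b.mapTime);
            }
        });
        // Remaining jobs go last, longest reduce phase first.
        Collections.sort(last, new Comparator<Job>() {
            public int compare(Job a, Job b) {
                return Double.compare(b.reduceTime, a.reduceTime);
            }
        });
        first.addAll(last);
        return first;
    }

    public static void main(String[] args) {
        List<Job> jobs = new ArrayList<Job>();
        jobs.add(new Job("A", 4, 2));
        jobs.add(new Job("B", 1, 3));
        jobs.add(new Job("C", 3, 3));
        jobs.add(new Job("D", 2, 1));
        for (Job j : order(jobs)) {
            System.out.println(j.name); // prints B, C, A, D (one per line)
        }
    }
}
```

The intuition is that jobs with short map phases start the reduce stage early, while jobs with short reduce phases are left for the end so that the reduce stage drains quickly.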

DISADVANTAGES OF EXISTING SYSTEM:

The previous works all focus on single-stage parallelism, where each job has only a single stage, whereas a MapReduce job has a map stage followed by a reduce stage.

PROPOSED SYSTEM:

In this paper, we target one subset of production MapReduce workloads: sets of independent jobs (e.g., each job processes a distinct data set, with no dependencies between jobs), which we optimize with different approaches.

For dependent jobs (i.e., a MapReduce workflow), a job can start only when the jobs it depends on have finished their computation, because of the input-output data dependency. In contrast, independent jobs can overlap their computation: when the current job completes its map phase and starts its reduce phase, the next job can begin its map phase in a pipelined fashion, taking over the map slots released by the previous job.
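The pipelined overlap between independent jobs can be sketched with a simplified two-stage model. The model is an assumption made here for illustration, not the paper's exact formulation: each job's map phase occupies all map slots for a given duration, its reduce phase occupies all reduce slots, and a job's reduce phase starts once its own map phase and the previous job's reduce phase have both finished. The class and method names are hypothetical.

```java
class PipelineMakespan {
    /**
     * times[i] = {mapPhaseTime, reducePhaseTime} of the i-th job in submission order.
     * Returns {makespan, totalCompletionTime} under the simplified pipeline model.
     */
    static double[] evaluate(double[][] times) {
        double mapEnd = 0;      // when the map stage becomes free
        double reduceEnd = 0;   // when the reduce stage becomes free
        double totalCompletion = 0;
        for (double[] t : times) {
            mapEnd += t[0];                                 // map phases run back to back
            reduceEnd = Math.max(reduceEnd, mapEnd) + t[1]; // reduce waits for map and prior reduce
            totalCompletion += reduceEnd;                   // this job finishes at reduceEnd
        }
        return new double[] {reduceEnd, totalCompletion};
    }

    public static void main(String[] args) {
        double[][] orderA = {{4, 2}, {1, 3}};
        double[][] orderB = {{1, 3}, {4, 2}};
        System.out.println(evaluate(orderA)[0]); // 9.0
        System.out.println(evaluate(orderB)[0]); // 7.0
    }
}
```

The same two jobs finish at time 9 in one order and at time 7 in the other, which is why the submission order matters even when the slot configuration is fixed.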

Conduct a theoretical study of the Johnson’s Rule-based heuristic algorithm for makespan, including its approximation ratio and the upper and lower bounds on makespan.

Propose a bi-criteria heuristic algorithm to optimize makespan and total completion time simultaneously, observing that there is a tradeoff between the two. Moreover, we show via theoretical analysis that the optimized job order produced by the proposed ordering algorithms is stable, i.e., it does not need to change in the face of server failures.

Propose slot configuration algorithms for makespan and total completion time. We also show that they have a proportional feature, which is important because it can be used to address the time efficiency problem of the proposed enumeration algorithms when the total number of slots is large.

Perform extensive experiments to validate the effectiveness of the proposed algorithms and theoretical results.

ADVANTAGES OF PROPOSED SYSTEM:

We evaluate our algorithms using both testbed workloads and synthetic Facebook workloads.

Experiments show that, for the makespan, the job ordering optimization algorithm achieves an approximately 14-36 percent improvement for testbed workloads and a 10-20 percent improvement for Facebook workloads. In contrast, with the map/reduce slot configuration algorithm, there is about a 50-60 percent improvement for testbed workloads and a 54-80 percent makespan improvement for Facebook workloads.

Experiments show that, for the total completion time, there is nearly a 5× improvement with the bi-criteria job ordering algorithm and a 4× improvement with the bi-criteria map/reduce slot configuration algorithm, for Facebook workloads that contain many small-size jobs.

MODULES:

- Job ordering module
- Mapping module
- Reducing module
- Slot configuration module

MODULE DESCRIPTION:

JOB ORDERING MODULE:

The non-overlap computation constraint between the map and reduce tasks of a MapReduce job results in different resource utilization for map/reduce slots under different job submission orders for batch jobs. Job ordering optimization for MapReduce workloads is important and challenging because:

(i) There is a strong data dependency between the map tasks and reduce tasks of a job, i.e., reduce tasks can only run after the map tasks.

(ii) Map tasks have to be allocated map slots, and reduce tasks have to be allocated reduce slots.

(iii) Both map slots and reduce slots are limited computing resources, configured by the Hadoop administrator in advance.

MAPPING MODULE:

Different job submission orders result in different resource utilization for map and reduce slots and, in turn, different performance (i.e., makespan) for batch jobs. Each map task applies the map function to its input split, and its output is then shuffled to the reduce tasks. The shuffle phase of the reduce tasks can start before all map tasks are completed, whereas their other two sub-phases cannot. A MapReduce job generally executes its map phase in multiple waves. Only the first wave of reduce tasks can overlap its data shuffling with map task computation.

REDUCING MODULE:

The computation for each reduce task consists of three sub-phases: shuffle, sort, and aggregation. The shuffle phase of reduce tasks can start before all map tasks are completed, whereas the other two sub-phases cannot. This means that the sort and aggregation phases of reduce tasks cannot overlap with map tasks. A MapReduce job generally executes its map and reduce phases in multiple waves. Only the first wave of reduce tasks can overlap its data shuffling with map task computation. The remaining reduce tasks cannot overlap with the map phase and start only after all map tasks are completed.
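The notion of waves can be made concrete with a small calculation. The sketch below assumes, purely for illustration, that every task in a phase takes the same time, so a phase with more tasks than slots runs in `ceil(tasks / slots)` waves; the class and method names are hypothetical.

```java
class TaskWaves {
    /** Number of waves needed to run the given tasks on the given number of slots. */
    static int waves(int tasks, int slots) {
        if (slots <= 0) {
            throw new IllegalArgumentException("slots must be positive");
        }
        return (tasks + slots - 1) / slots; // integer ceiling of tasks / slots
    }

    /** Phase duration when every task takes taskTime (a simplifying assumption). */
    static double phaseTime(int tasks, int slots, double taskTime) {
        return waves(tasks, slots) * taskTime;
    }

    public static void main(String[] args) {
        // 10 map tasks on 4 map slots: waves of 4, 4, and 2 tasks.
        System.out.println(waves(10, 4));           // 3
        System.out.println(phaseTime(10, 4, 20.0)); // 60.0
    }
}
```

Under this model, adding slots only shortens a phase when it removes a whole wave, which is one reason the slot split between map and reduce affects performance in steps rather than smoothly.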

SLOT CONFIGURATION MODULE:

Let the total number of map slots plus reduce slots for the cluster be fixed. The influence of slot configuration on overall performance is evaluated by running the jobs under all possible map/reduce slot configurations for an arbitrary job order. The optimal makespan is then compared with that of the unoptimized configuration to show the performance gain.
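A minimal sketch of such an enumeration is shown below. It is not the paper's algorithm: the cost model (uniform task times, phases that run in whole waves, and a two-stage pipeline between consecutive jobs) and all names are assumptions chosen to keep the example small.

```java
class SlotConfigSearch {
    /**
     * jobs[i] = {mapTasks, mapTaskTime, reduceTasks, reduceTaskTime} in submission order.
     * Returns the makespan for the given slot split under the simplified model.
     */
    static double makespan(double[][] jobs, int mapSlots, int reduceSlots) {
        double mapEnd = 0;
        double reduceEnd = 0;
        for (double[] j : jobs) {
            double mapPhase = Math.ceil(j[0] / mapSlots) * j[1];       // waves * task time
            double reducePhase = Math.ceil(j[2] / reduceSlots) * j[3];
            mapEnd += mapPhase;
            reduceEnd = Math.max(reduceEnd, mapEnd) + reducePhase;
        }
        return reduceEnd;
    }

    /** Tries every split of totalSlots and returns the map-slot count with the smallest makespan. */
    static int bestMapSlots(double[][] jobs, int totalSlots) {
        if (totalSlots < 2) {
            throw new IllegalArgumentException("need at least one map and one reduce slot");
        }
        int best = 1;
        double bestSpan = Double.POSITIVE_INFINITY;
        for (int m = 1; m < totalSlots; m++) {
            double span = makespan(jobs, m, totalSlots - m);
            if (span < bestSpan) { // ties keep the smaller map-slot count
                bestSpan = span;
                best = m;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        double[][] jobs = {{12, 10, 2, 10}, {4, 10, 6, 10}};
        int m = bestMapSlots(jobs, 4);
        System.out.println(m);                        // 2
        System.out.println(makespan(jobs, m, 4 - m)); // 110.0
    }
}
```

With four slots in total, the sample workload takes 180, 110, and 120 time units for 1, 2, and 3 map slots respectively, so the enumeration picks two map slots and two reduce slots.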

SYSTEM REQUIREMENTS:

HARDWARE REQUIREMENTS:

System : i3 Processor

Hard Disk : 500 GB.

Monitor : 15’’ LED

Input Devices : Keyboard, Mouse

RAM : 4 GB.

SOFTWARE REQUIREMENTS:

Operating system : Windows 7/Ubuntu.

Coding Language : Java 1.7, Hadoop 0.8.1

IDE : Eclipse

Database : MySQL

REFERENCE:

Shanjiang Tang, Bu-Sung Lee, and Bingsheng He, “Dynamic Job Ordering and Slot Configurations for MapReduce Workloads”, IEEE TRANSACTIONS ON SERVICES COMPUTING, VOL. 9, NO. 1, JANUARY/FEBRUARY 2016.

A MapReduce application processes the data in input splits on a record-by-record basis, and each record is understood by MapReduce to be a key/value pair. After the input splits have been calculated, the mapper tasks can start processing them, that is, right after the Resource Manager’s scheduling facility assigns them their processing resources. (In Hadoop 1, the JobTracker assigns mapper tasks to specific processing slots.)

The mapper task itself processes its input split one record at a time; in the figure, this lone record is represented by a key/value pair. In the case of our flight data, when the input splits are calculated (using the default file processing method for text files), the assumption is that each row in the text file is a single record.

For each record, the text of the row itself represents the value, and the byte offset of the row from the beginning of the file is considered to be the key.

You might be wondering why the row number isn’t used instead of the byte offset. When you consider that a very large text file is broken down into many individual data blocks, and is processed as many splits, the row number is a risky concept.

The number of lines in each split varies, so it would be impossible to compute the number of rows preceding the one being processed. However, with the byte offset, you can be precise, because every block has a fixed number of bytes.
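To make the byte-offset key concrete, here is a small standalone sketch that does not use Hadoop at all. It splits a text on newlines and keys each line by the byte offset at which it starts, counted from the beginning of the given text. The class name and the sample flight-style rows are hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

class ByteOffsetRecords {
    /** Returns (byte offset of line start, line text) pairs for '\n'-terminated lines. */
    static Map<Long, String> records(String text) {
        Map<Long, String> out = new LinkedHashMap<Long, String>();
        long offset = 0;
        for (String line : text.split("\n")) {
            out.put(offset, line);
            // Advance by the encoded length of the line plus one byte for '\n'.
            offset += line.getBytes(StandardCharsets.UTF_8).length + 1;
        }
        return out;
    }

    public static void main(String[] args) {
        Map<Long, String> recs = records("AA,100\nUA,2345\nDL,7\n");
        for (Map.Entry<Long, String> e : recs.entrySet()) {
            System.out.println(e.getKey() + " -> " + e.getValue());
        }
        // 0 -> AA,100
        // 7 -> UA,2345
        // 15 -> DL,7
    }
}
```

Each key depends only on the bytes that precede the line, so a reader that knows the starting byte position of its split can compute keys without knowing how many rows came before.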

As a mapper task processes each record, it generates a new key/value pair: The key and the value here can be completely different from the input pair. The output of the mapper task is the full collection of all these key/value pairs.

Before the final output file for each mapper task is written, the output is partitioned based on the key and sorted. This partitioning means that all of the values for each key are grouped together.

In the case of the fairly basic sample application, there is only a single reducer, so all the output of the mapper task is written to a single file. But in cases with multiple reducers, every mapper task may generate multiple output files as well.

The breakdown of these output files is based on the partitioning key. For example, if there are only three distinct partitioning keys output for the mapper tasks and you have configured three reducers for the job, there will be three mapper output files. In this example, if a particular mapper task processes an input split and it generates output with two of the three keys, there will be only two output files.
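The mapping from keys to reducers can be sketched in plain Java. The formula below, a non-negative hash taken modulo the number of reducers, follows the approach commonly attributed to Hadoop's default hash partitioner. Using `String.hashCode()` is an assumption for illustration, since Hadoop's own key types compute their hashes differently, and the class and method names are hypothetical.

```java
import java.util.List;
import java.util.Set;
import java.util.TreeSet;
import java.util.Arrays;

class HashPartitionSketch {
    /** Maps a key to a partition (reducer) index in [0, numReducers). */
    static int partition(String key, int numReducers) {
        // Masking with Integer.MAX_VALUE clears the sign bit so the result is never negative.
        return (key.hashCode() & Integer.MAX_VALUE) % numReducers;
    }

    /** Partitions that would receive output from a mapper emitting the given keys. */
    static Set<Integer> partitionsUsed(List<String> keys, int numReducers) {
        Set<Integer> used = new TreeSet<Integer>();
        for (String k : keys) {
            used.add(partition(k, numReducers));
        }
        return used;
    }

    public static void main(String[] args) {
        System.out.println(partition("AA", 3)); // 1
        System.out.println(partition("UA", 3)); // 0
        // A mapper that only emits these two keys touches two of the three partitions.
        System.out.println(partitionsUsed(Arrays.asList("AA", "UA", "AA"), 3)); // [0, 1]
    }
}
```

Note that with a hash-based scheme, two distinct keys can land in the same partition, so the number of non-empty partitions is at most, not exactly, the number of distinct keys.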

Always compress your mapper tasks’ output files. The biggest benefit here is in performance gains, because writing smaller output files minimizes the inevitable cost of transferring the mapper output to the nodes where the reducers are running.

The default partitioner is more than adequate in most situations, but sometimes you may want to customize how the data is partitioned before it’s processed by the reducers. For example, you may want the data in your result sets to be sorted by the key and their values — known as a secondary sort.

To do this, you can override the default partitioner and implement your own. This process requires some care, however, because you’ll want to ensure that the number of records in each partition is uniform. (If one reducer has to process much more data than the other reducers, you’ll wait for your MapReduce job to finish while the single overworked reducer is slogging through its disproportionally large data set.)

Using uniformly sized intermediate files, you can better take advantage of the parallelism available in MapReduce processing.
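One simple way to check how uniform a partitioning is: compare the largest partition with the average partition size. The metric and the names below are assumptions made for illustration, not part of Hadoop; a ratio of 1.0 means perfectly even partitions, and larger values mean one reducer will lag behind the rest.

```java
class PartitionSkew {
    /** Ratio of the largest partition's record count to the mean record count. */
    static double skew(int[] recordsPerPartition) {
        long total = 0;
        int max = 0;
        for (int n : recordsPerPartition) {
            total += n;
            max = Math.max(max, n);
        }
        if (total == 0) {
            return 1.0; // no records at all: treat as uniform
        }
        double mean = (double) total / recordsPerPartition.length;
        return max / mean;
    }

    public static void main(String[] args) {
        System.out.println(skew(new int[] {100, 100, 100})); // 1.0
        System.out.println(skew(new int[] {250, 25, 25}));   // 2.5
    }
}
```

In the second case the busiest reducer handles 2.5 times the average load, so the job's reduce phase is bounded by that single reducer rather than by the cluster's parallelism.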